Designing a Conversational AI Banking Assistant with Amazon Lex & AWS Lambda

Traditional customer service in banking is often slow and inefficient. Customers typically have to navigate complex phone menus or wait for a live agent to perform simple tasks such as checking an account balance or transferring funds. This presents an opportunity to design and implement a conversational AI solution that automates these routine tasks. Such a solution gives customers a fast, natural, and secure experience while freeing human agents to focus on more complex inquiries. This project shows how a functional and scalable AI chatbot can be built from the ground up.

My Role

As the designer and implementer of this project, I was responsible for the entire lifecycle of the conversational interface. My role involved defining user intents and creating conversational flows, as well as implementing a robust technical solution using multiple AWS services. This included:

Conversational Flow Design: Designing natural, efficient conversation paths for multiple intents.

Technical Implementation: Building the chatbot and its components in Amazon Lex.

Logic & Integration: Connecting the chatbot to AWS Lambda functions to handle complex tasks.

Deployment Strategy: Using CloudFormation to ensure the solution was scalable and easily deployable.

The Solution: A Phased Technical Approach

My strategy involved a modular, phased development approach for creating a robust conversational banking bot, illustrating how complex problems can be broken down into manageable, strategic steps.

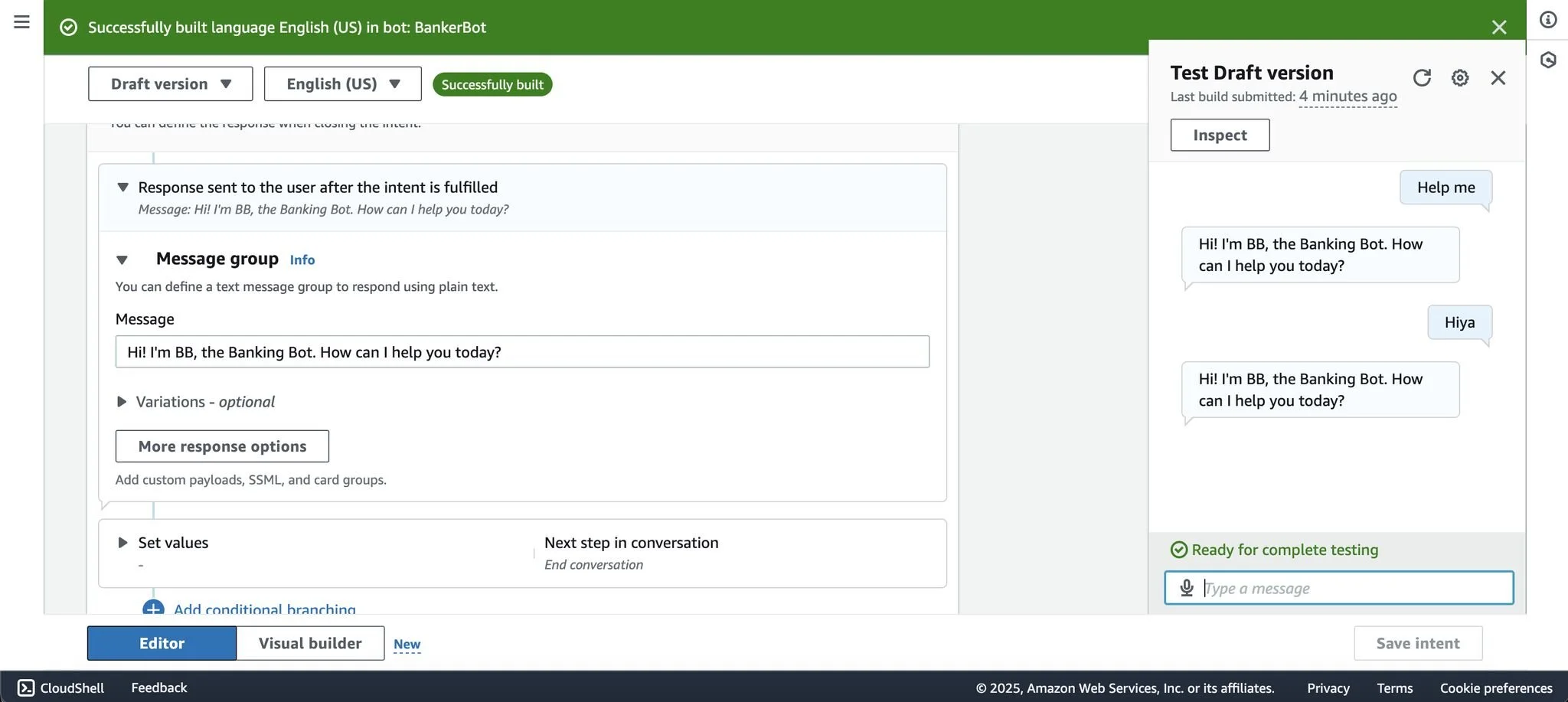

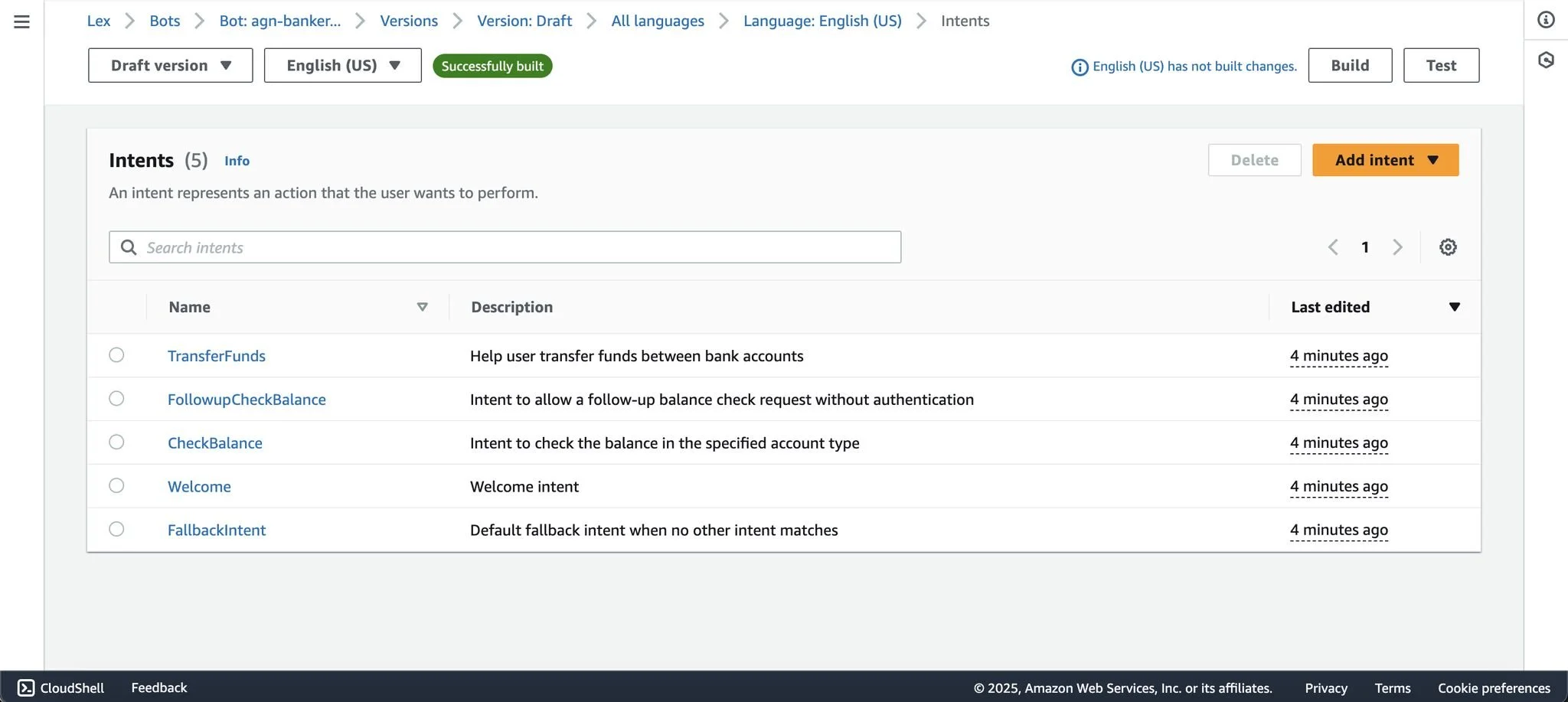

Phase 1: Foundation & Core Intents

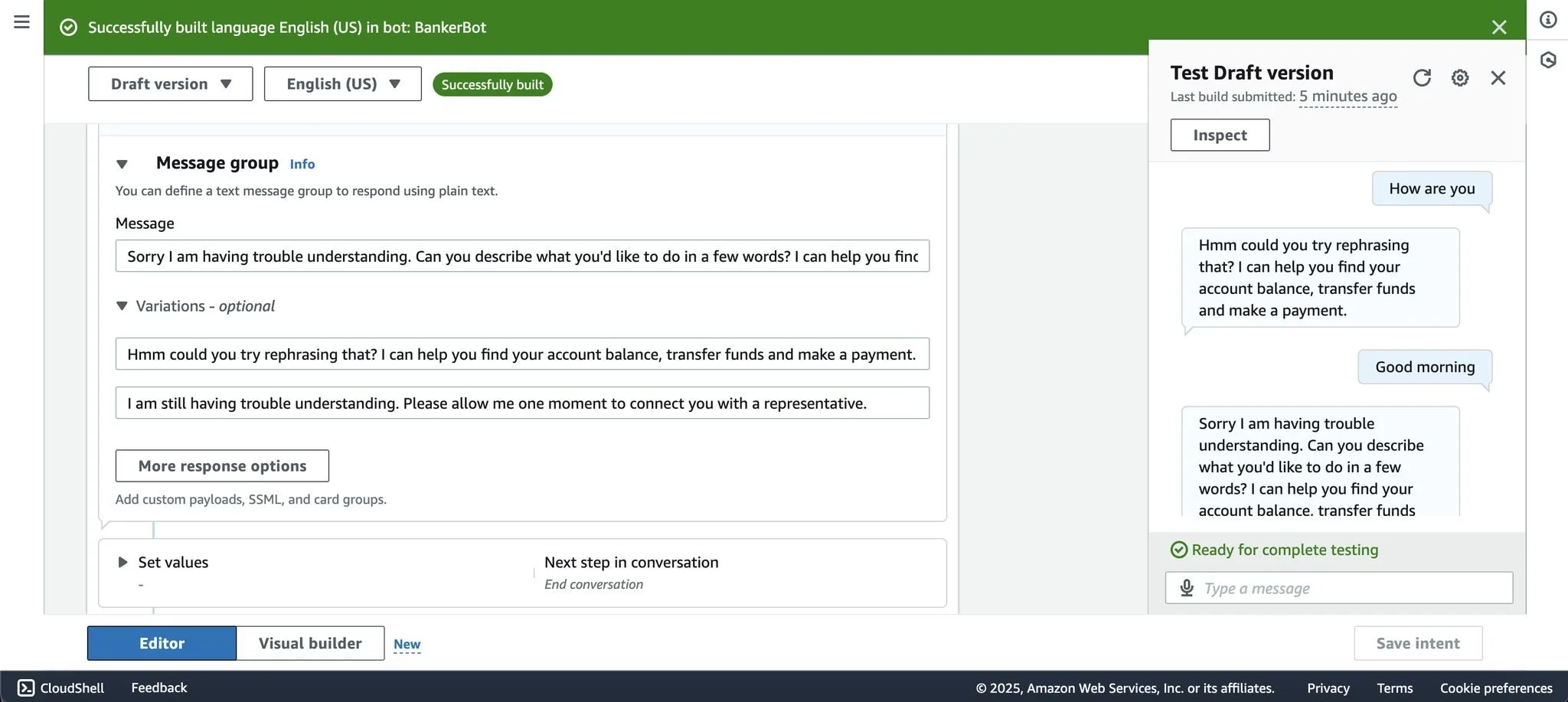

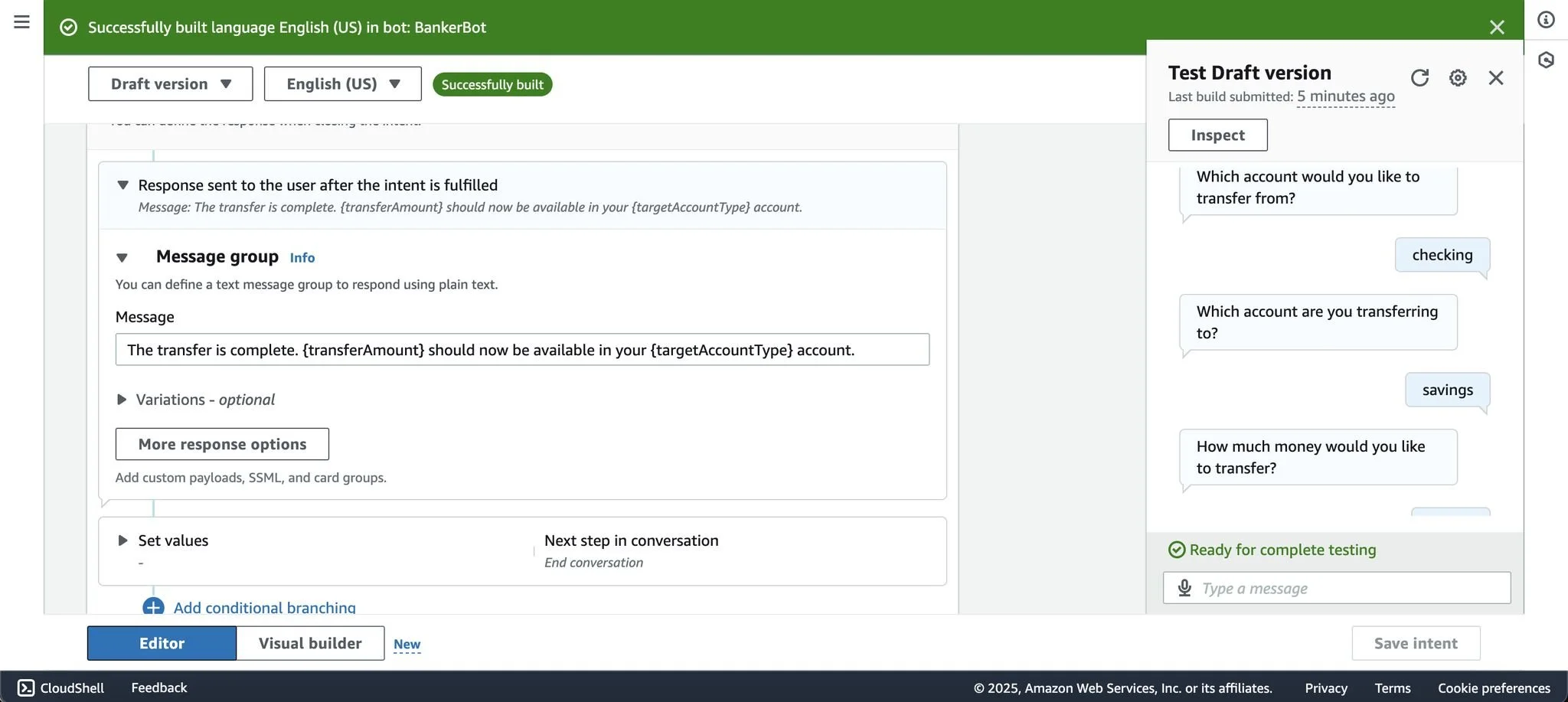

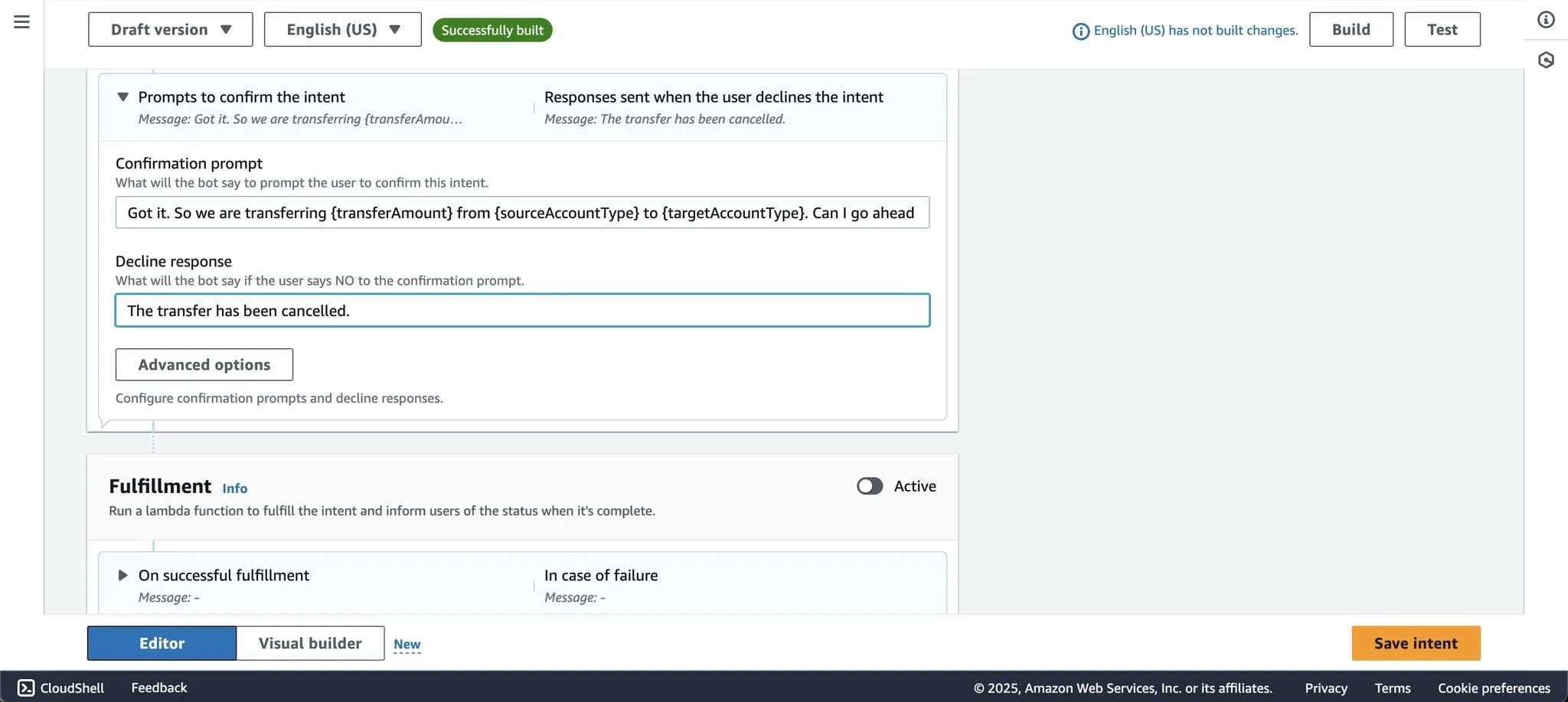

The project began with the core conversational interface. I defined the key intents the chatbot would handle, including CheckBalance and TransferFunds, which established the foundational user actions. I also created a FallbackIntent to handle requests the bot could not understand. Instead of failing silently, it responds with helpful suggestions so the user can rephrase or choose a supported task.

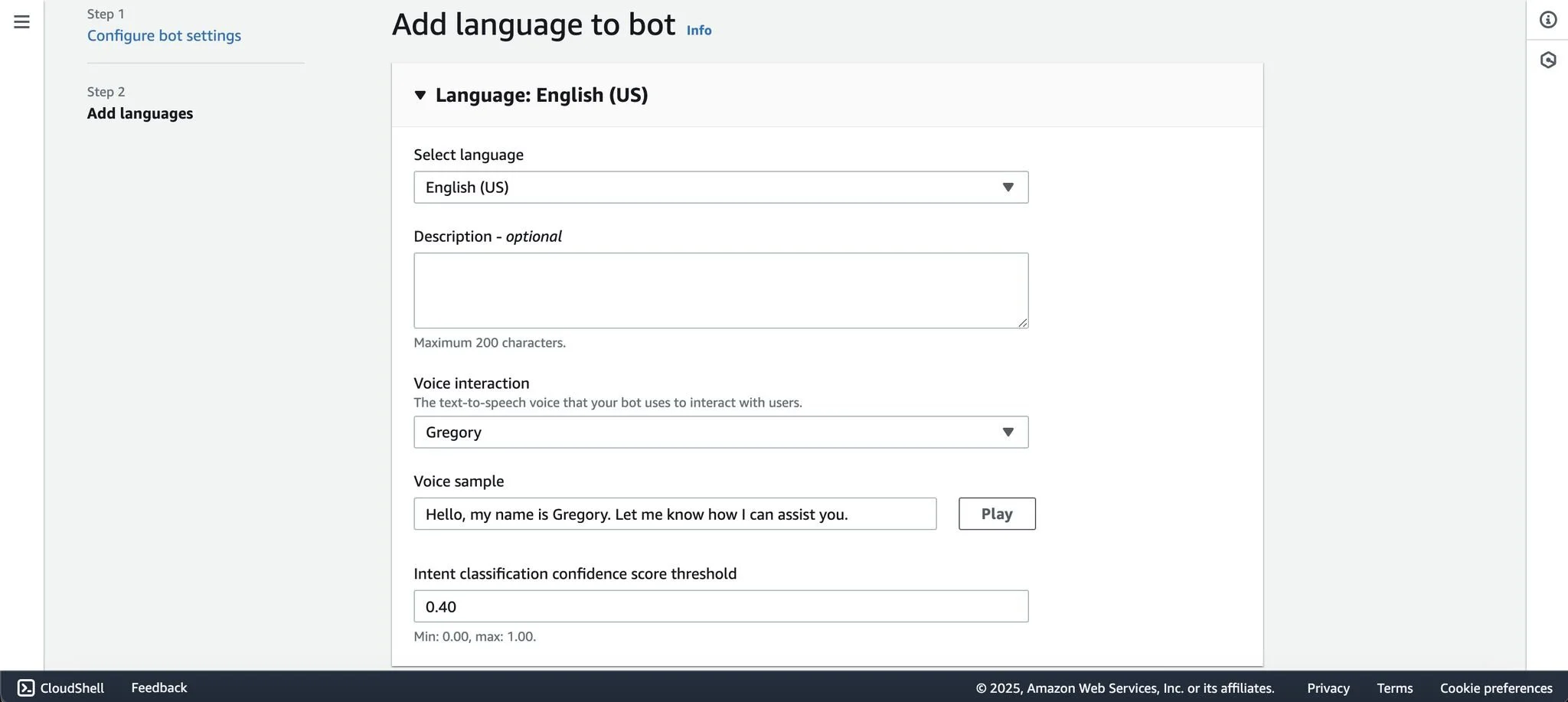

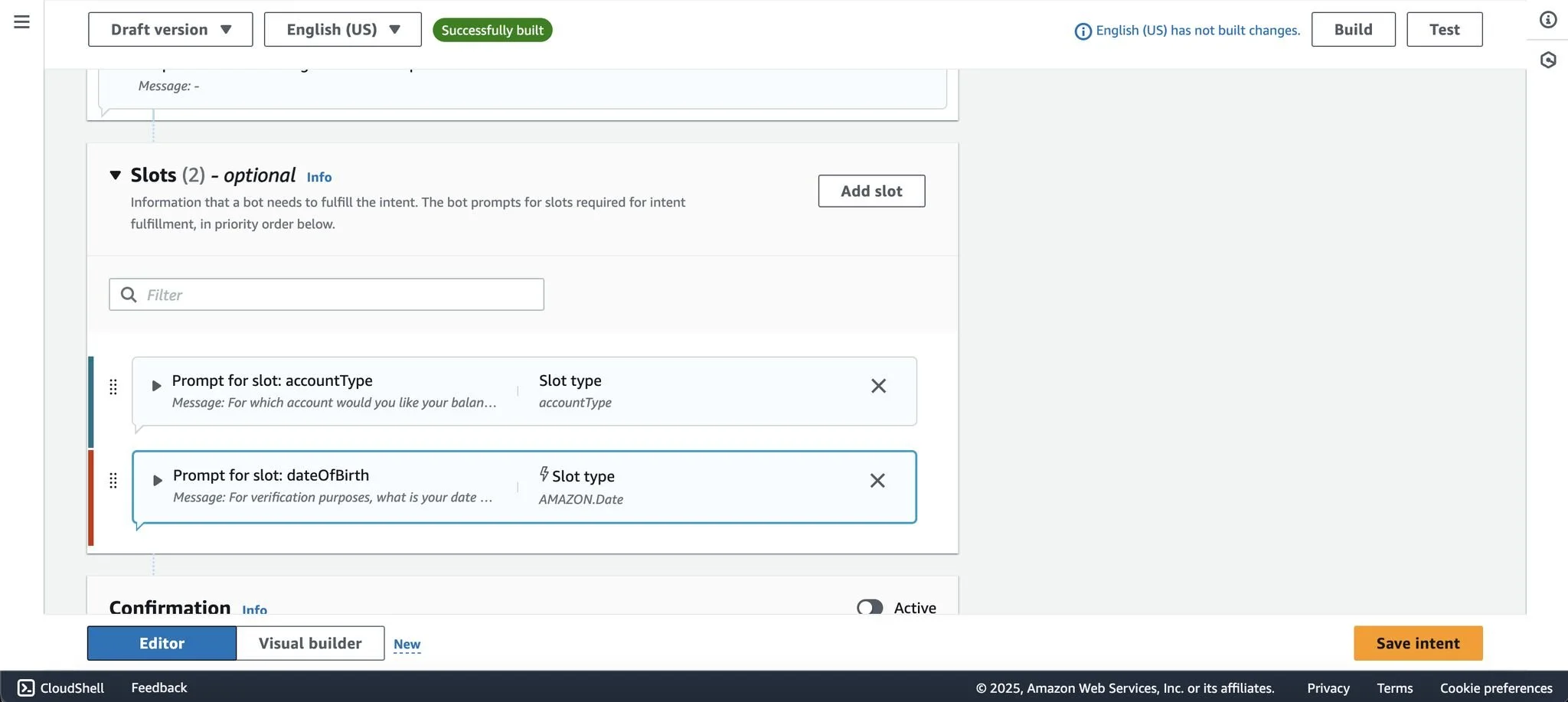

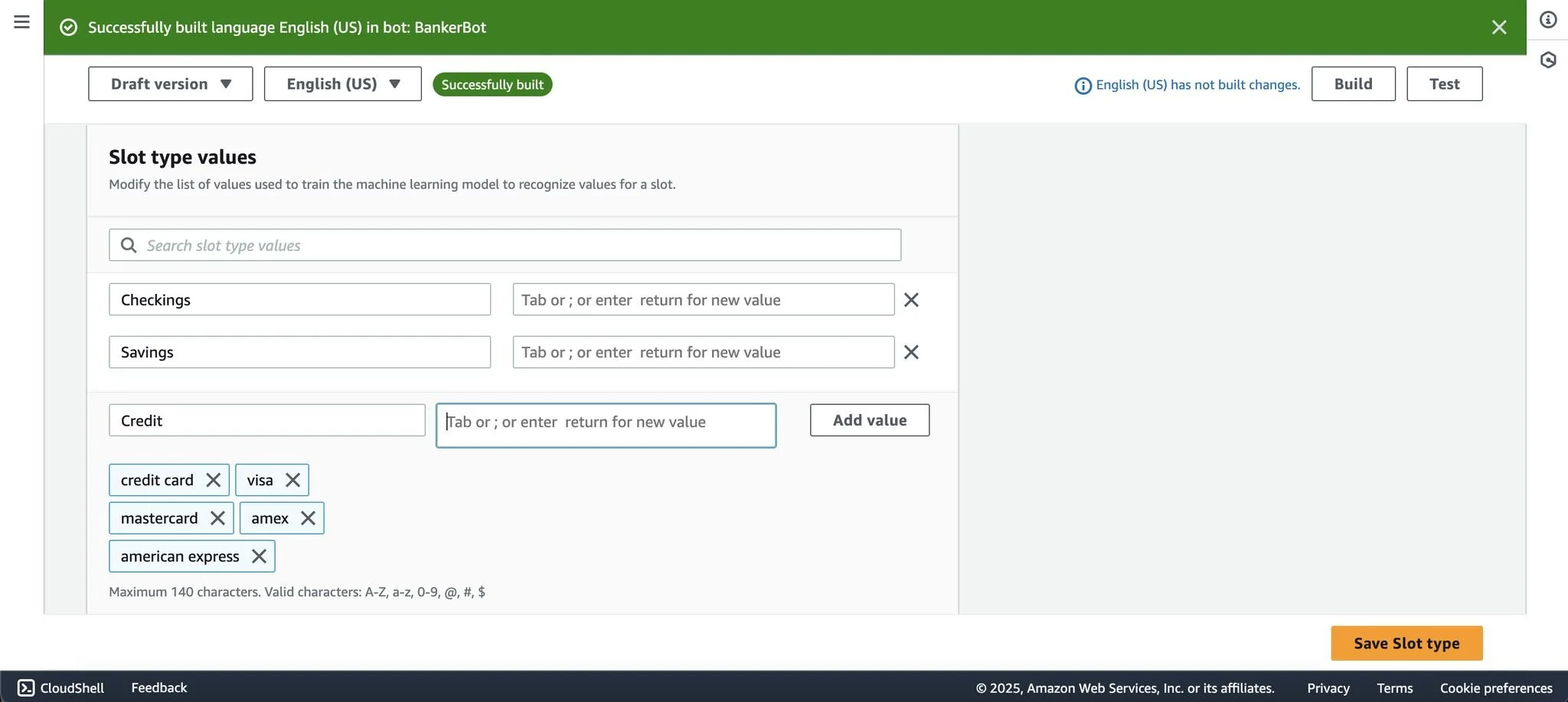

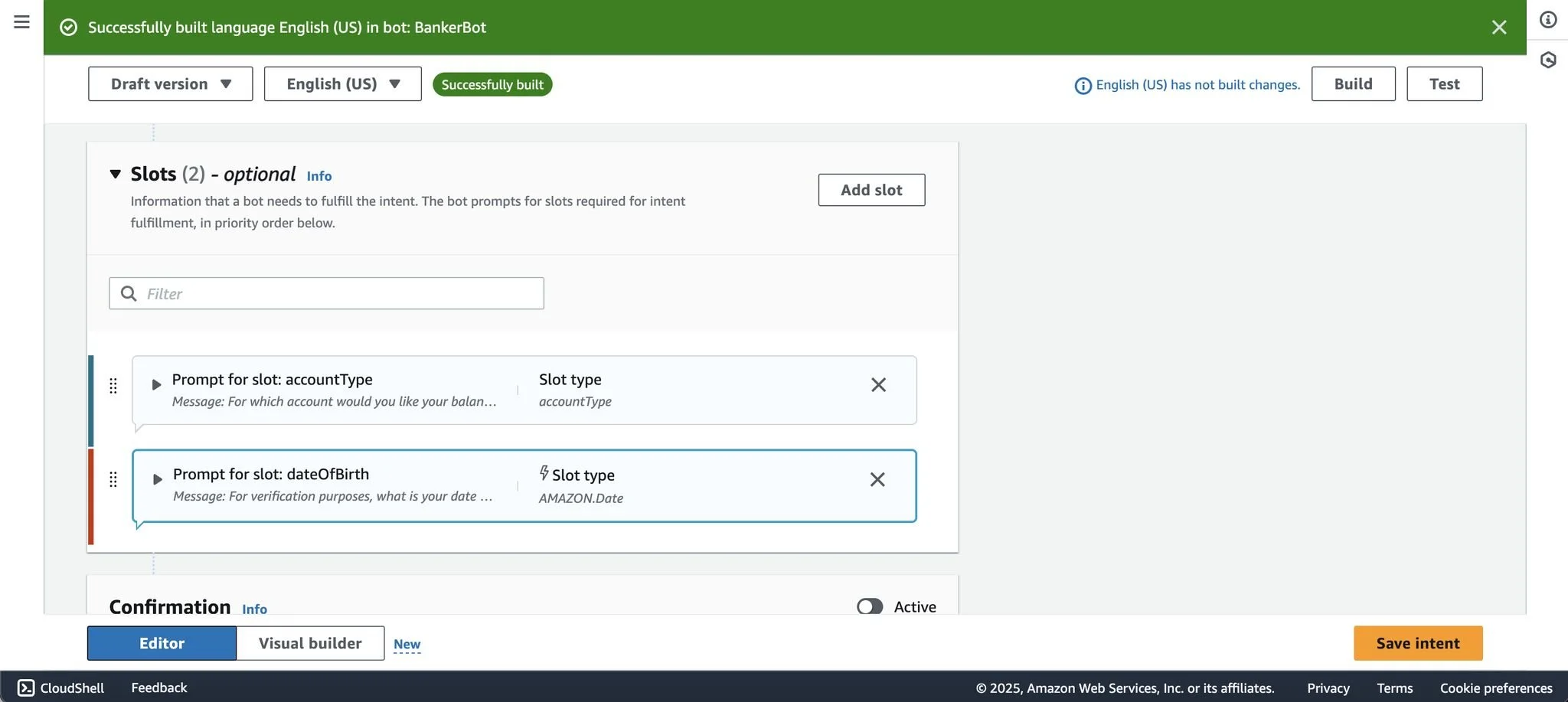

Phase 2: Enhancing the Experience with Custom Slots & User Verification

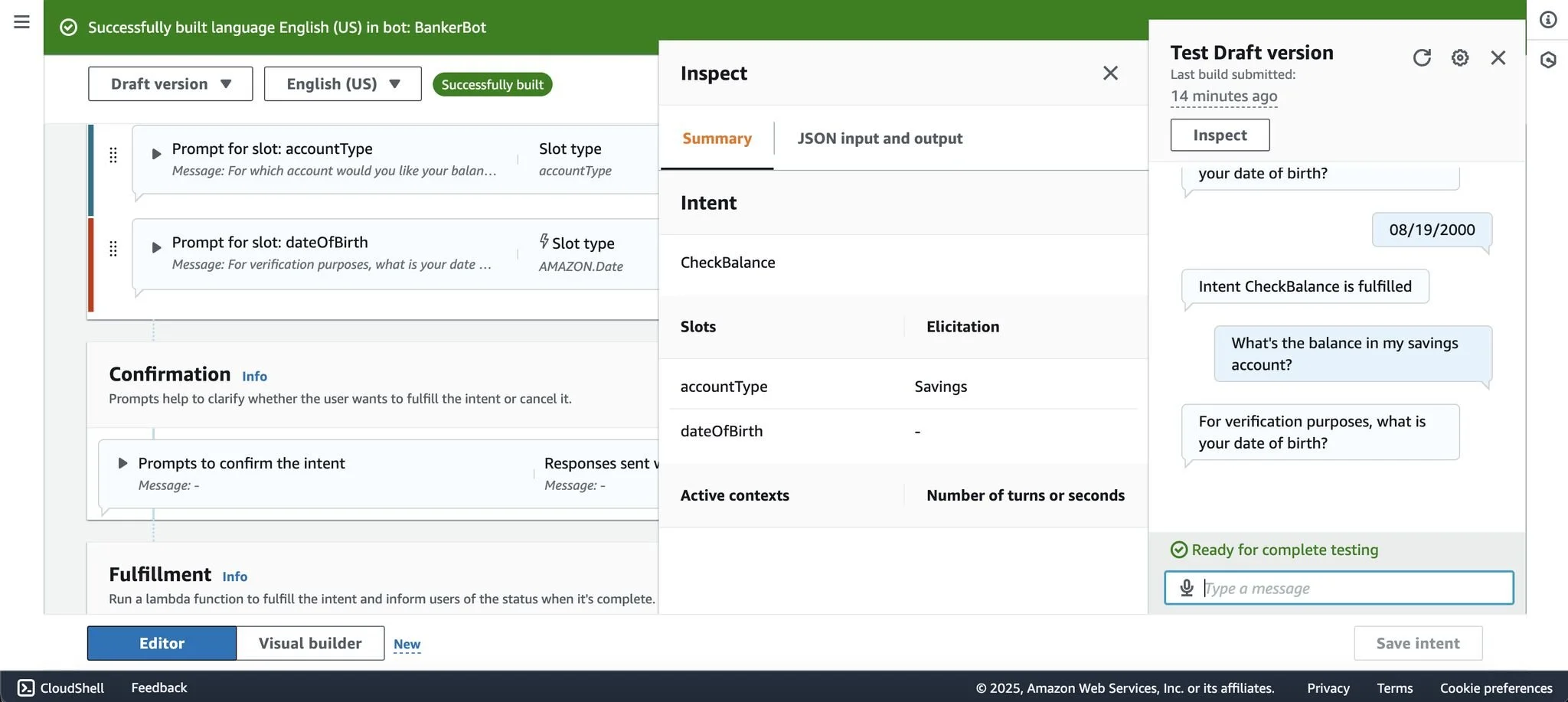

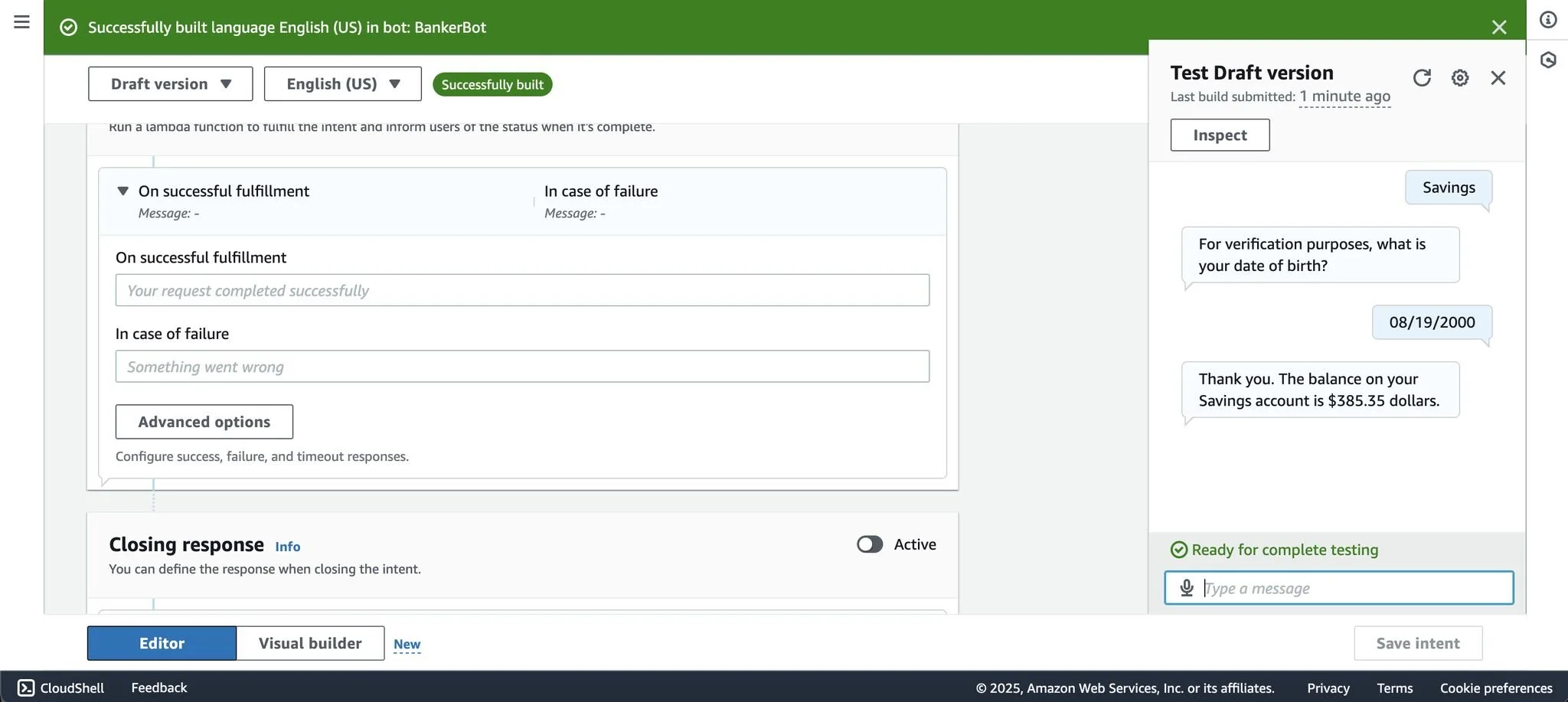

To make the chatbot more efficient and secure, I added custom slots to the intents. For the CheckBalance intent, I implemented slots for accountType (e.g., "savings," "checking") and dateOfBirth. This allowed the bot to gather essential information in a structured way, reducing conversational turns and enabling user verification for sensitive inquiries.
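As a rough illustration of how these slots reach back-end code, the sketch below reads slot values from an event shaped like the one Amazon Lex V2 passes to a Lambda function. The project does not state which Lex version was used, so the V2 event shape is an assumption, and the helper name `get_slot_value` and the sample event are hypothetical.

```python
from typing import Optional


def get_slot_value(event: dict, slot_name: str) -> Optional[str]:
    """Return the resolved value of a slot, or None if it has not been filled."""
    intent = event.get("sessionState", {}).get("intent", {})
    slots = intent.get("slots") or {}
    slot = slots.get(slot_name)
    # Lex sends an unfilled slot as null, so guard before reading its value.
    if not slot or not slot.get("value"):
        return None
    return slot["value"].get("interpretedValue")


# Hypothetical event fragment for illustration only (assumed Lex V2 shape).
sample_event = {
    "sessionState": {
        "intent": {
            "name": "CheckBalance",
            "slots": {
                "accountType": {
                    "value": {
                        "originalValue": "my savings",
                        "interpretedValue": "Savings",
                        "resolvedValues": ["Savings"],
                    }
                },
                "dateOfBirth": None,  # not collected yet
            },
        }
    }
}

print(get_slot_value(sample_event, "accountType"))
print(get_slot_value(sample_event, "dateOfBirth"))
```

Reading the interpreted value rather than the raw utterance means "my savings" and "savings account" can both resolve to the same slot value, which is what keeps the conversation short.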

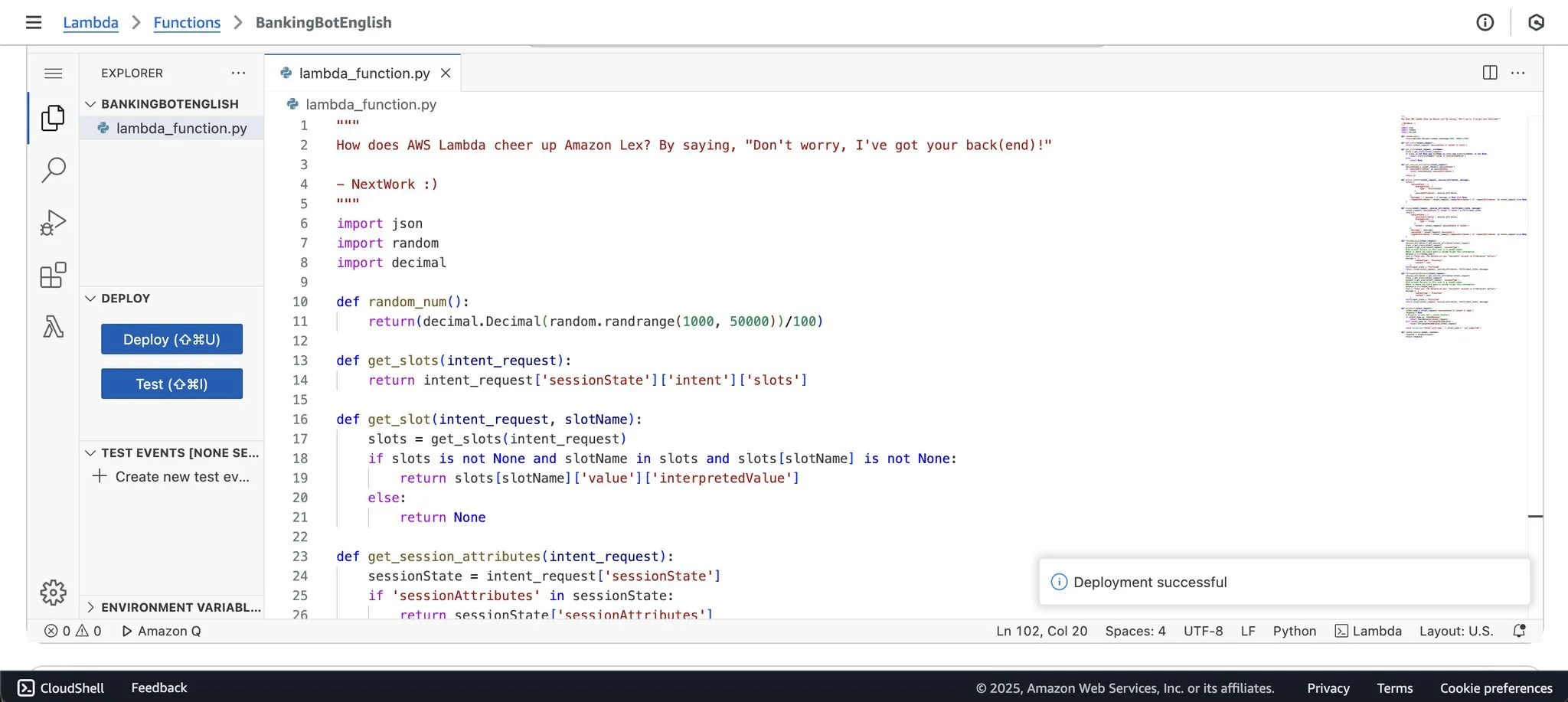

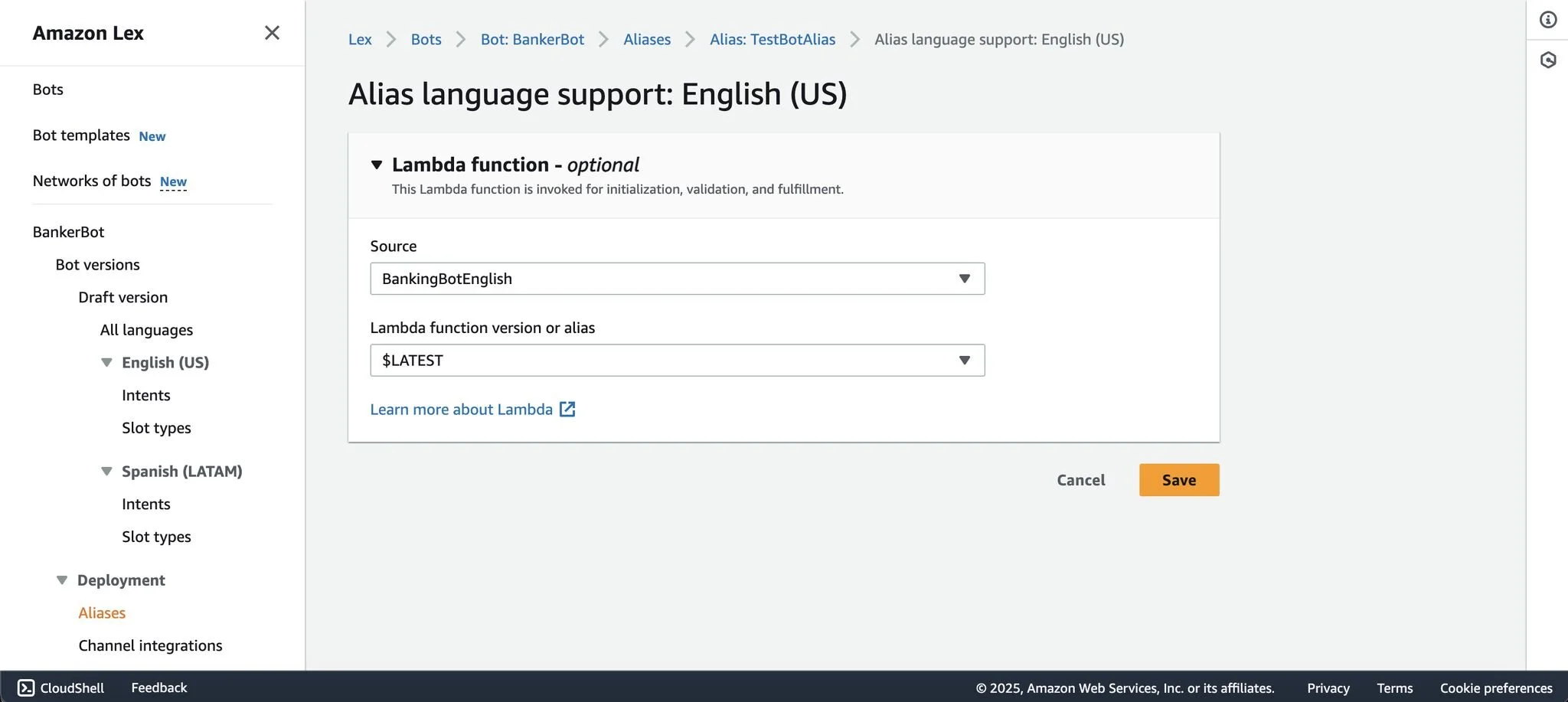

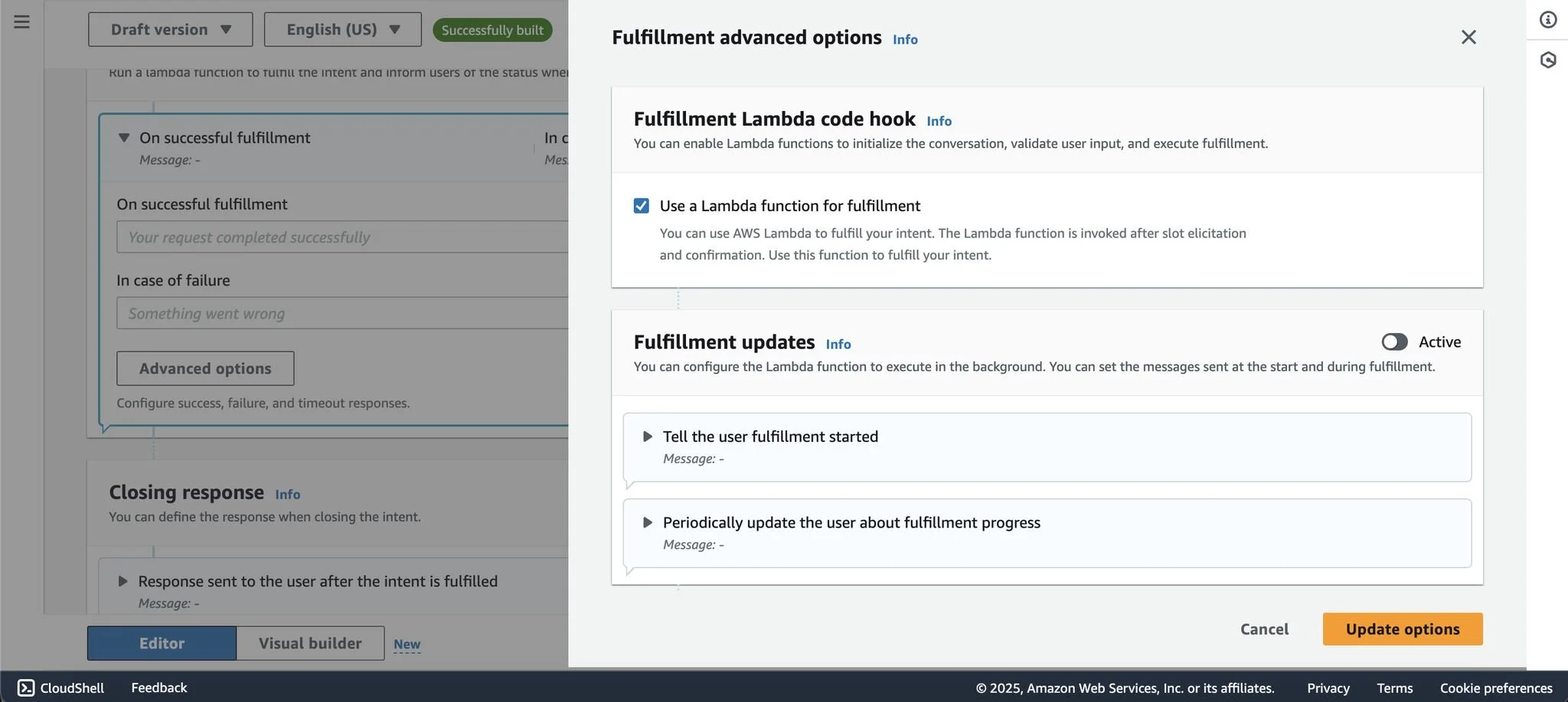

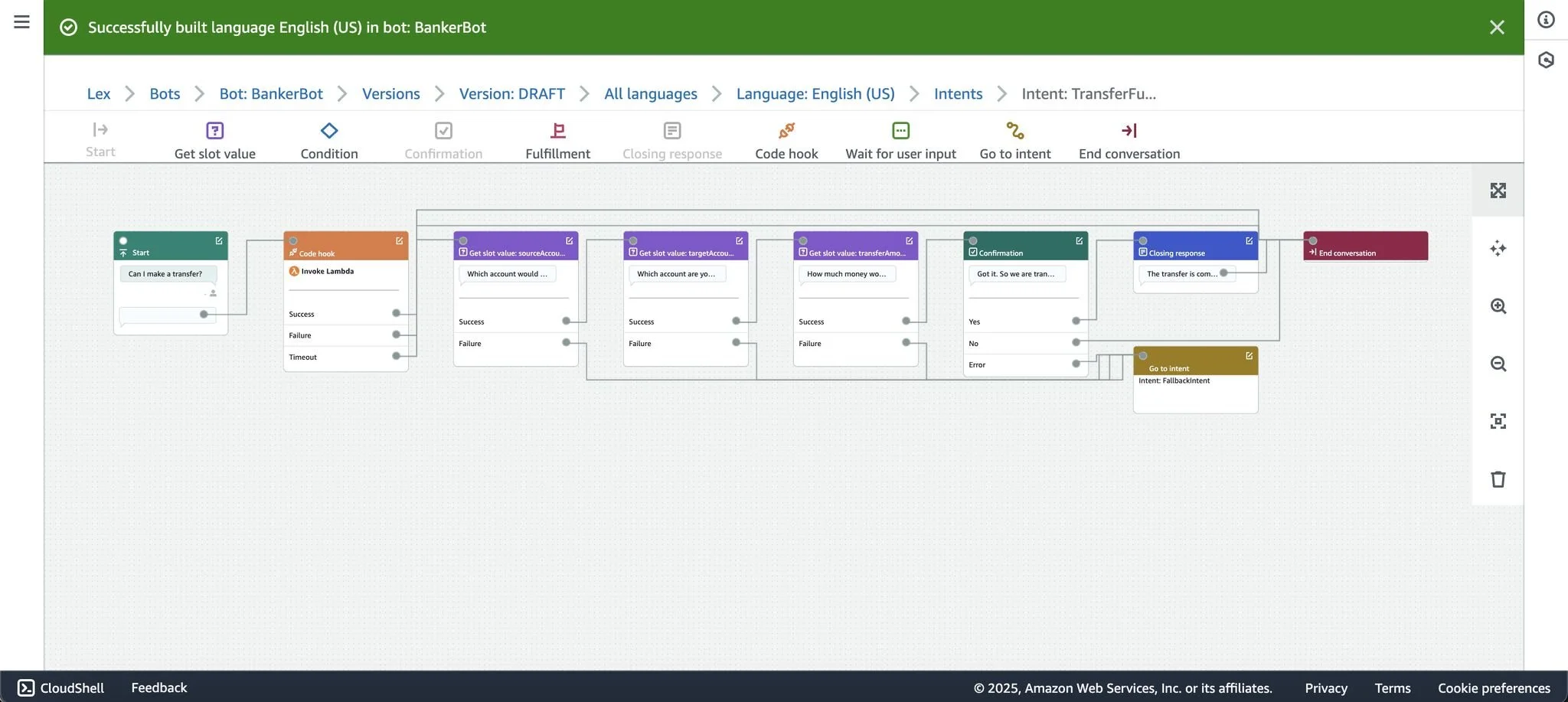

Phase 3: Integrating Business Logic with AWS Lambda

A critical step was connecting the chatbot's intents to actual business logic. I used an AWS Lambda function as a "code hook" to handle fulfillment of the CheckBalance intent. Instead of returning a static response, the bot calls the function, which generates a random dollar figure as a mock bank balance. This integration demonstrated how to connect an AI front end to a back-end service.
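A minimal sketch of what such a fulfillment hook could look like is shown below. It is not the project's actual function: it assumes the Lex V2 event and response format, a slot named `accountType`, and an arbitrary balance range chosen for illustration.

```python
import random


def lambda_handler(event, context):
    """Fulfil CheckBalance by replying with a mock (random) balance."""
    intent = event["sessionState"]["intent"]
    slots = intent.get("slots") or {}

    # Fall back to a generic label if the slot is missing or unfilled.
    account_slot = slots.get("accountType") or {}
    account_type = (account_slot.get("value") or {}).get(
        "interpretedValue", "selected"
    )

    # Mock balance: a random figure stands in for a real core-banking lookup.
    balance = round(random.uniform(100, 5000), 2)

    return {
        "sessionState": {
            # "Close" tells Lex the conversation for this intent is finished.
            "dialogAction": {"type": "Close"},
            "intent": {
                "name": intent["name"],
                "slots": slots,
                "state": "Fulfilled",
            },
        },
        "messages": [
            {
                "contentType": "PlainText",
                "content": (
                    f"Thank you. The balance on your {account_type} "
                    f"account is ${balance:,.2f}."
                ),
            }
        ],
    }
```

In a production system, the random figure would be replaced by a call to an account service, while the response envelope returned to Lex would stay the same.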

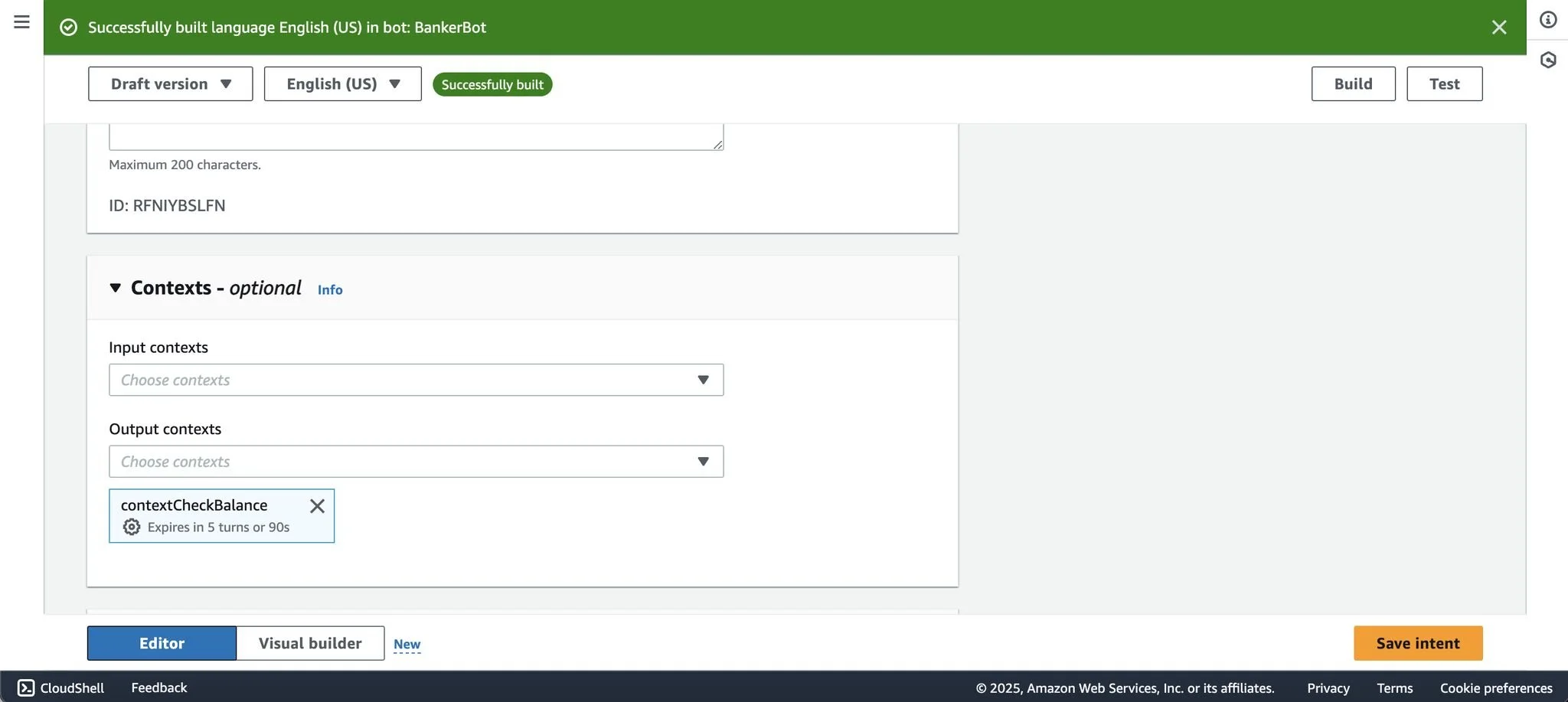

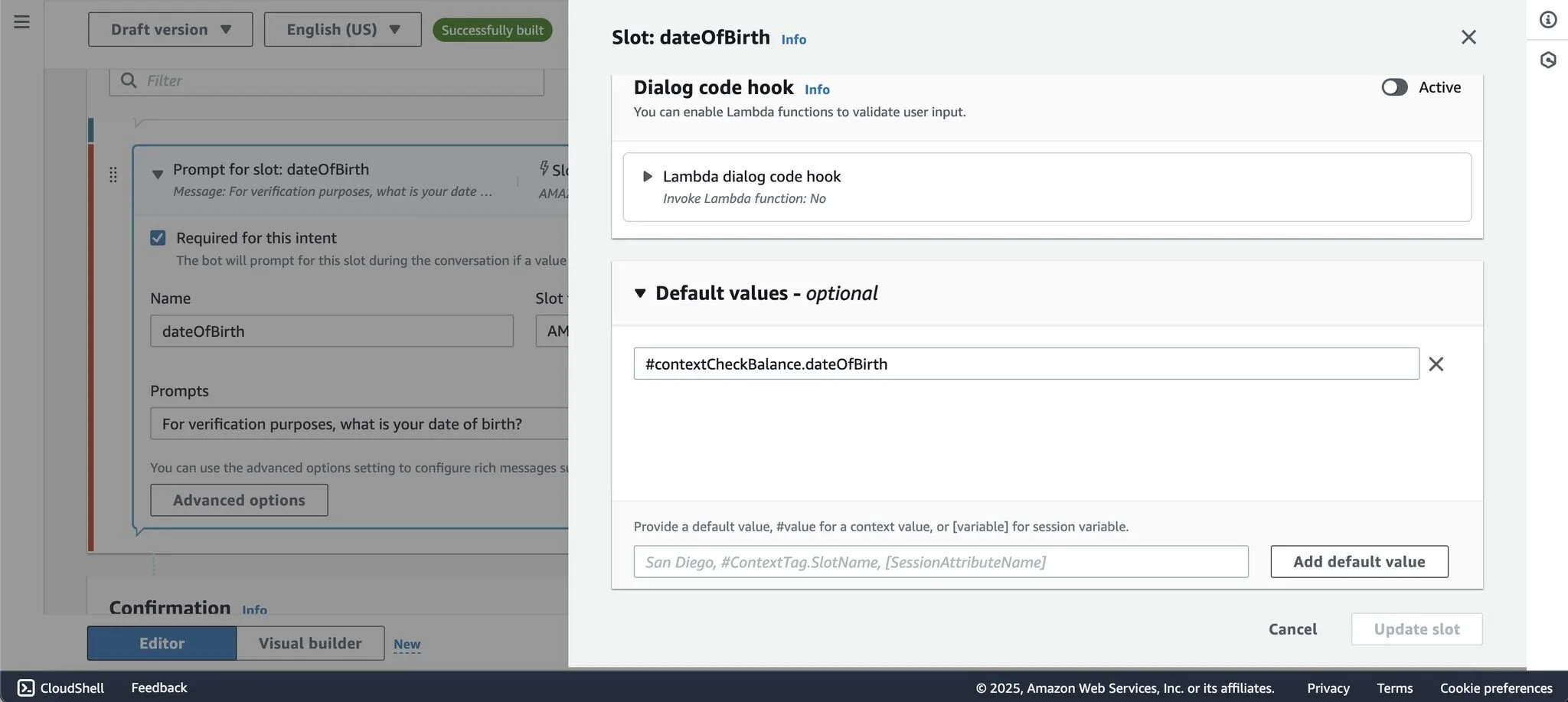

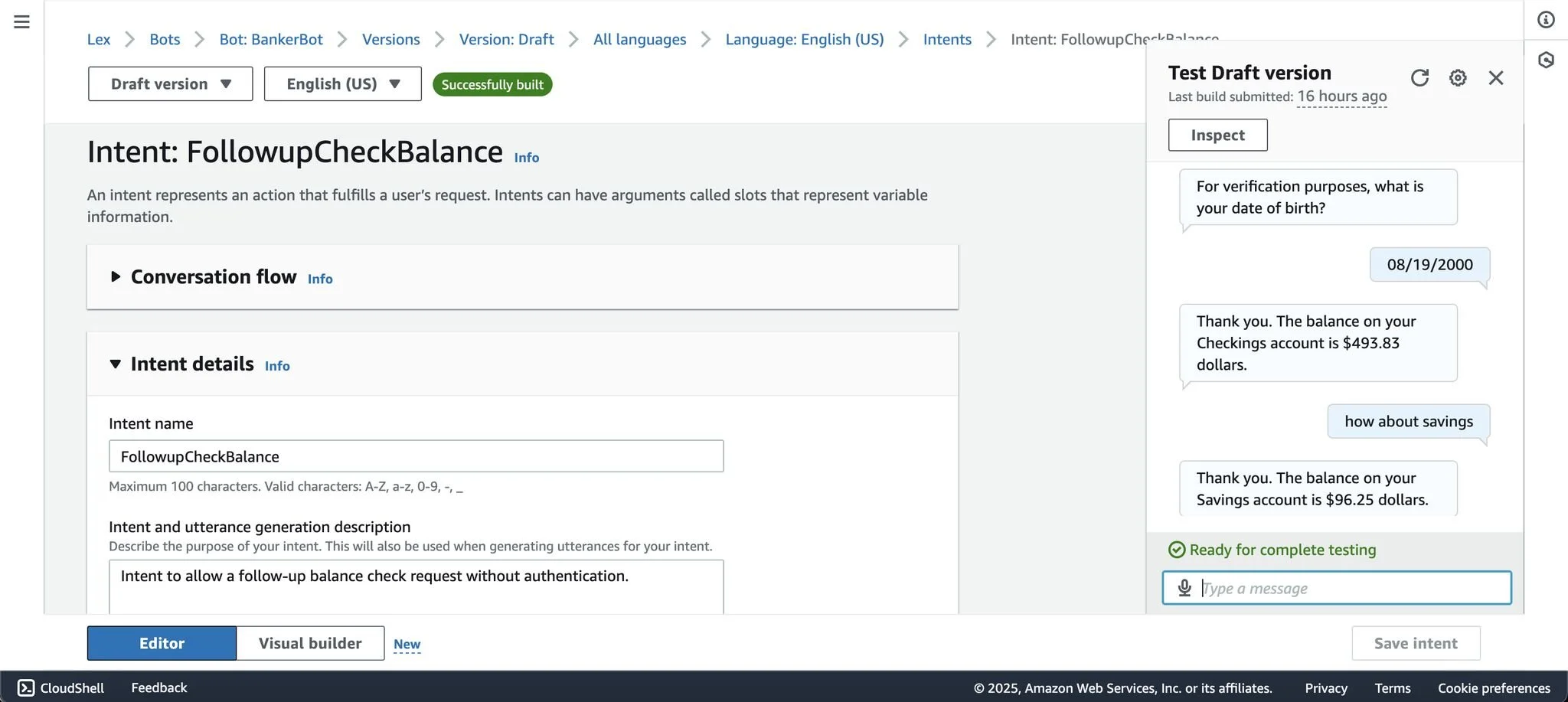

Phase 4: Creating a Seamless Conversation with Context Tags

To improve the user experience for repeat inquiries, I implemented context tags. After a user's birth date was verified for an initial balance check, the context was stored. The user could then ask for the balance on another account (e.g., "what about savings?") without re-entering their birth date, which made the conversation feel more human-like and efficient.
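Contexts like this are usually configured in the Lex console, but the same idea can be expressed in code. The sketch below shows one way a Lambda response could attach an active context to the session state so a follow-up intent can reuse the verified birth date. It assumes the Lex V2 `activeContexts` format; the context name, the lifetime values, and the helper `with_verified_context` are hypothetical.

```python
import copy

# Hypothetical context name; the project does not state the one it used.
CONTEXT_NAME = "contextCheckBalance"


def with_verified_context(session_state: dict, date_of_birth: str) -> dict:
    """Return a copy of session_state that remembers the verified birth date."""
    updated = copy.deepcopy(session_state)

    # Drop any stale copy of this context so it is not duplicated.
    contexts = [
        c for c in updated.get("activeContexts", [])
        if c.get("name") != CONTEXT_NAME
    ]
    contexts.append(
        {
            "name": CONTEXT_NAME,
            # The context expires after whichever limit is reached first.
            "timeToLive": {"timeToLiveInSeconds": 90, "turnsToLive": 5},
            "contextAttributes": {"dateOfBirth": date_of_birth},
        }
    )
    updated["activeContexts"] = contexts
    return updated


state = {"intent": {"name": "CheckBalance", "state": "Fulfilled"}}
print(with_verified_context(state, "1990-04-12")["activeContexts"])
```

Giving the context a short lifetime is a deliberate trade-off: the follow-up question works without re-verification, but the stored birth date does not linger for the rest of the session.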

Phase 5: Scalability & Deployment with CloudFormation

The final phase focused on scalability and enterprise readiness. I used CloudFormation to automate the deployment of the complete BankerBot solution. This process demonstrated a knowledge of modern DevOps practices and highlighted the importance of a clear deployment strategy, including troubleshooting and resolving permissions errors to ensure the solution was fully operational and secure.
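The write-up does not say which permissions failed. One common cause in this kind of setup is that Lex has not been granted permission to invoke the Lambda function. The sketch below builds a CloudFormation template fragment in Python that grants it through an `AWS::Lambda::Permission` resource. The logical ID `BankingBotFunction`, the example ARN, and the helper name are placeholders, and the function resource itself is assumed to be defined elsewhere in the full template.

```python
import json


def lex_invoke_permission(function_logical_id: str, bot_alias_arn: str) -> dict:
    """Build a resource that lets a Lex V2 bot alias invoke a Lambda function."""
    return {
        "Type": "AWS::Lambda::Permission",
        "Properties": {
            "Action": "lambda:InvokeFunction",
            # Ref on a Lambda function resource resolves to its name.
            "FunctionName": {"Ref": function_logical_id},
            "Principal": "lexv2.amazonaws.com",
            # Restrict the grant to one bot alias instead of all of Lex.
            "SourceArn": bot_alias_arn,
        },
    }


# Placeholder identifiers for illustration only.
template_fragment = {
    "AWSTemplateFormatVersion": "2010-09-09",
    "Resources": {
        "LexInvokePermission": lex_invoke_permission(
            "BankingBotFunction",
            "arn:aws:lex:us-east-1:123456789012:bot-alias/EXAMPLEBOT/EXAMPLEALIAS",
        )
    },
}

print(json.dumps(template_fragment, indent=2))
```

Scoping the permission with `SourceArn` keeps the grant as narrow as possible, in line with the goal of a deployment that is both operational and secure.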

Impact & Learnings

This project resulted in a fully functional conversational banking assistant capable of handling multiple intents, verifying user information, and executing dynamic logic through AWS Lambda.

Key learnings from this project include:

The Difference Between Traditional and Generative AI: Traditional chatbots require specific inputs and lack the flexibility of generative models, a challenge that was overcome by meticulously designing intents and slots.

The Power of Integration: Connecting an AI interface to a serverless function (Lambda) is a powerful pattern for building scalable solutions that can perform complex, real-world tasks.

Thinking Beyond the Interface: A strategic approach to AI design requires an understanding of the technical architecture, security, and deployment pipelines to build a complete and viable product. This project reinforced the value of a holistic approach to design, from the user's first word to the final line of code.